A Grammar for Reproducible and Painless Extract-Transform-Load Operations on Medium Data

Benjamin S. Baumer http://bit.ly/2pgFSfJ Dartmouth Biomedical Data Science March 16th, 2018

Ben Baumer

Benjamin S. Baumer http://bit.ly/2pgFSfJ Dartmouth Biomedical Data Science March 16th, 2018

We obtained bike usage statistics for April, May, June and July 2014 from Citi Bike’s website (https://www.citibikenyc.com/system-data). This dataset contains start station id, end station id, station latitude, station longitude and trip time for each bike trip. 332 bike stations have one or more originating bike trips. 253 of these are in Manhattan while 79 are in Brooklyn (left panel of Figure 1). We processed this raw data to get the number of bike trips between each station pair during morning rush hours. –Singhvi, et al. (2015)

citibike databasebikes objectlibrary(citibike)

bikes <- etl("citibike",

dir = "~/dumps/citibike/",

db = src_mysql_cnf("citibike"))bikes %>%

etl_update(years = 2014, months = 4:7)trips <- bikes %>%

tbl("trips")

trips %>%

group_by(Start_Station_ID) %>%

summarize(num_trips = n()) %>%

filter(num_trips >= 1) %>%

arrange(desc(num_trips)) %>%

collect()## # A tibble: 332 x 2

## Start_Station_ID num_trips

## <dbl> <dbl>

## 1 519. 50316.

## 2 521. 49511.

## 3 293. 45391.

## 4 497. 45154.

## 5 426. 40046.

## 6 435. 37542.

## 7 285. 36074.

## 8 499. 33849.

## 9 151. 33776.

## 10 444. 33663.

## # ... with 322 more rowsWe focused on the month of September, 2013; i.e. the peak month of the usage in 2013. Therefore, the final sample consists of 237,600 records (330 stations × 24 hours × 30 days). –Fagigh-Imani & Eluru (2016)

bikes %>%

etl_update(year = 2013, months = 9)trips %>%

filter(YEAR(Start_Time) == 2013) %>%

group_by(Start_Station_ID,

DAY(Start_Time),

HOUR(Start_Time)) %>%

summarize(N = n(),

num_stations = COUNT(DISTINCT(Start_Station_ID)),

num_days = COUNT(DISTINCT(DAYOFYEAR(Start_Time)))) %>%

collect() %>%

nrow()## [1] 167258

An article about a computational result is advertising, not scholarship. The actual scholarship is the full software environment, code and data, that pro- duced the result. –Claerbout, 1994

1 Version control

2 Literate programming

3 Scriptability

4 All of the above

| “Size” | size | hardware | software |

|---|---|---|---|

| small | < several GB | RAM | R |

| medium | several GB – a few TB | hard disk | SQL |

| big | many TB or more | cluster | Spark? |

Bureau of Transportation Statistics on-time flight data

| R package | timespan | airports | size |

|---|---|---|---|

hflights |

2011 | IAH, HOU | 2.1 MB |

nycflights13 |

2013 | LGA, JFK, EWR | 4.4 MB |

airlines |

1987–2017 | ~350 | ~7 GB |

SELECT, they probably don’t know CREATE TABLE

So you want to write a script…

git, may not be robustIn linguistics, grammar is the set of structural rules governing the composition of clauses, phrases, and words in any given natural language. –Wikipedia

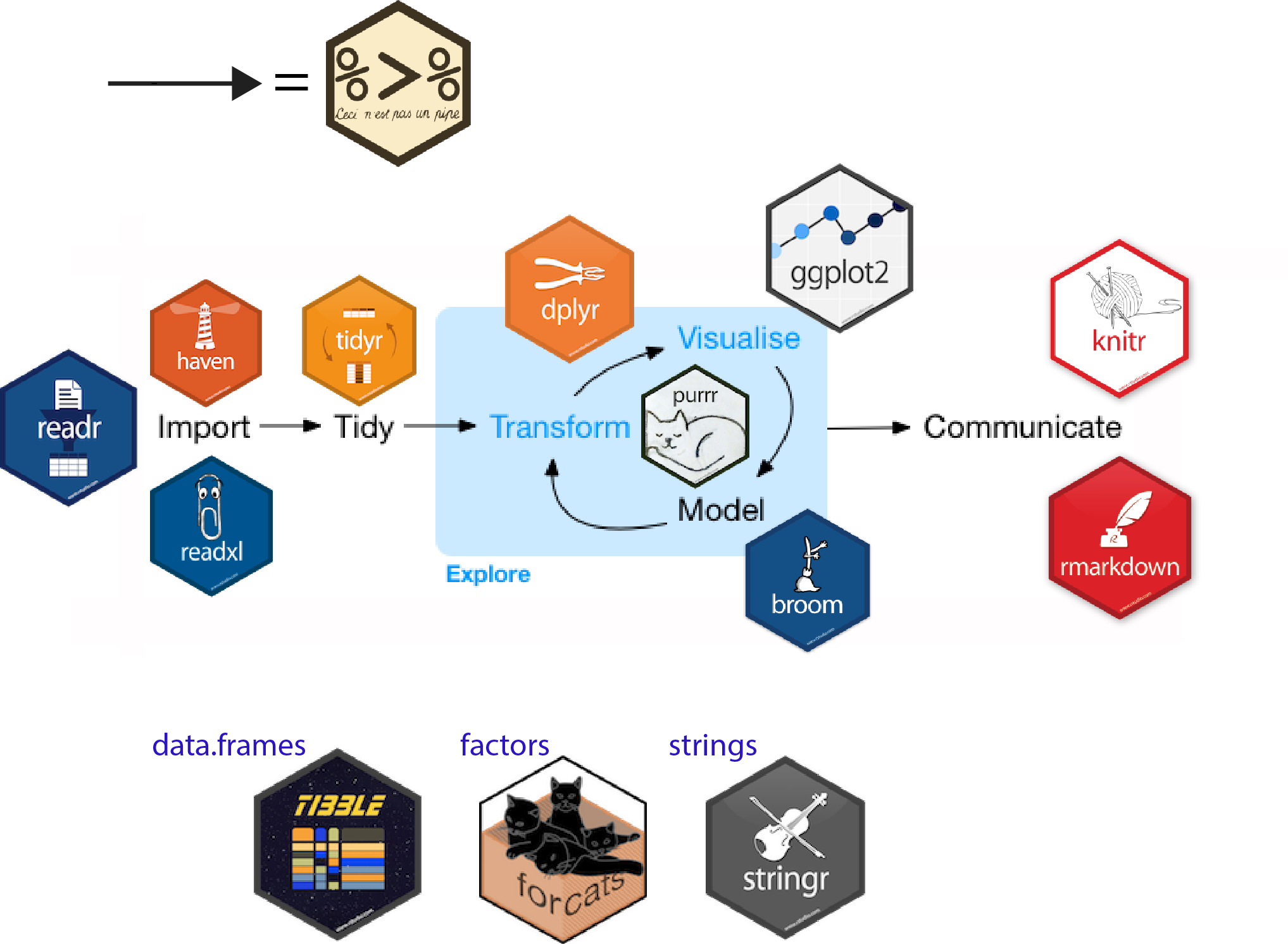

ggplot2 (Wickham, 2009)dplyr: a grammar of data manipulation (Wickham & Francois, 2016)etletl_extract()etl_transform()etl_load()

mtcarslibrary(etl)

cars <- etl("mtcars") %>%

etl_extract() %>%

etl_transform() %>%

etl_load()

cars %>%

tbl("mtcars") %>%

group_by(cyl) %>%

summarize(N = n(), mean_mpg = mean(mpg))## # Source: lazy query [?? x 3]

## # Database: sqlite 3.19.3 [/tmp/RtmpW7JK2C/file70c245bf0158.sqlite3]

## cyl N mean_mpg

## <int> <int> <dbl>

## 1 4 11 26.7

## 2 6 7 19.7

## 3 8 14 15.1airlinessystem("mysql -e 'CREATE DATABASE IF NOT EXISTS airlines;'")etl objectlibrary(airlines)

src_db <- src_mysql_cnf("airlines", groups = "aws")

ontime <- etl("airlines", db = src_db, dir = "~/dumps/airlines") airlines cont’dontime %>%

etl_extract(years = 1987:2017) %>%

etl_transform(years = 1990:1999) %>%

etl_load(years = 1996:1997, months = c(1:6, 9))airlines cont’dontime %>%

tbl("flights") %>%

filter(year == 1996, dest == "BTV") %>%

group_by(carrier) %>%

summarize(num_flights = n(),

avg_delay = mean(arr_delay, na.rm = TRUE)) %>%

arrange(desc(avg_delay))## # Source: lazy query [?? x 3]

## # Database: mysql 5.7.21-0ubuntu0.16.04.1 [bbaumer@127.0.0.1:/airlines]

## # Ordered by: desc(avg_delay)

## carrier num_flights avg_delay

## <chr> <dbl> <dbl>

## 1 UA 665. 11.2

## 2 US 1254. 9.22etl object isdplyr::src_sql object

tempfile)DBI::dbWriteTable methodsclass(cars)## [1] "etl_mtcars" "etl" "src_dbi" "src_sql" "src"etl object hastempdir by defaultsummary(ontime)## files:

## n size path

## 1 365 6.719 GB /home/bbaumer/dumps/airlines/raw

## 2 363 19.536 GB /home/bbaumer/dumps/airlines/load## Length Class Mode

## con 1 MySQLConnection S4

## disco 2 -none- environmentgetS3method("etl_update", "default")## function(obj, ...) {

## obj <- obj %>%

## etl_extract(...) %>%

## etl_transform(...) %>%

## etl_load(...)

## invisible(obj)

## }

## <environment: namespace:etl>getS3method("etl_create", "default")## function(obj, ...) {

## obj <- obj %>%

## etl_init(...) %>%

## etl_update(...) %>%

## etl_cleanup(...)

## invisible(obj)

## }

## <environment: namespace:etl>etletl vignettecreate_etl_package()etl_extract.foo()etl_transform.foo()etl_load.foo()

etl_extractetl_extract.etl_pkgname <- function(obj, ...) {

raw_dir <- attr(obj, "raw_dir")

# write code to download files to raw_dir

# use params in ... to fetch the appropriate files

invisible(obj)

}etl_transformetl_transform.etl_pkgname <- function(obj, ...) {

raw_dir <- attr(obj, "raw_dir")

# use params in ... to fetch the appropriate files

# read the data in

raw_data <- readr::read_csv()

# write code to transform, clean, etc.

load_dir <- attr(obj, "load_dir")

# write a CSV to load_dir

readr::write_csv()

invisible(obj)

}etl_loadetl_load.etl_pkgname <- function(obj, ...) {

load_dir <- attr(obj, "load_dir")

# use params in ... to fetch the appropriate files

# load the CSV(s) into SQL

DBI::dbWriteTable(obj$con, "mytable", path_to_csv)

invisible(obj)

}etl_update() chains ETL operationsetl_cleanup() deletes filessmart_download() only downloads files that don’t already existetl packages!:memory: instead of disk for SQLite?feather for intermediate files?dbWriteTable() methods that read CSVsdplyr and rstats-db developers!!