| race | two_year_recid | risk_score | n |
|---|---|---|---|
| African-American | 0 | High | 345 |
| African-American | 0 | Low | 1169 |
| African-American | 1 | High | 843 |
| African-American | 1 | Low | 818 |
| Caucasian | 0 | High | 106 |
| Caucasian | 0 | Low | 1175 |
| Caucasian | 1 | High | 230 |
| Caucasian | 1 | Low | 592 |
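As a sketch in base R (no faireR needed), the counts in this table can be loaded and cross-tabulated into one 2×2 confusion table per group. The object names `compas_counts` and `conf_tabs` are our own, chosen for illustration; `xtabs()` is the standard stats function for building contingency tables from counts.

```r
# Counts copied from the table above (long format: one row per cell)
compas_counts <- data.frame(
  race           = rep(c("African-American", "Caucasian"), each = 4),
  two_year_recid = rep(c(0, 0, 1, 1), times = 2),
  risk_score     = rep(c("High", "Low"), times = 4),
  n              = c(345, 1169, 843, 818, 106, 1175, 230, 592)
)

# One 2x2 table (risk_score x two_year_recid) for each race
conf_tabs <- xtabs(n ~ risk_score + two_year_recid + race, data = compas_counts)
print(conf_tabs)
```

Each slice, e.g. `conf_tabs[, , "Caucasian"]`, is the confusion table for one group, which is all the fairness criteria below need.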

Reward Systems in Sports

Who’s the Fairest of Them All?

Smith College

Sep 27, 2025

I wrote a thing…

On fairness in sports (Baumer 2024)

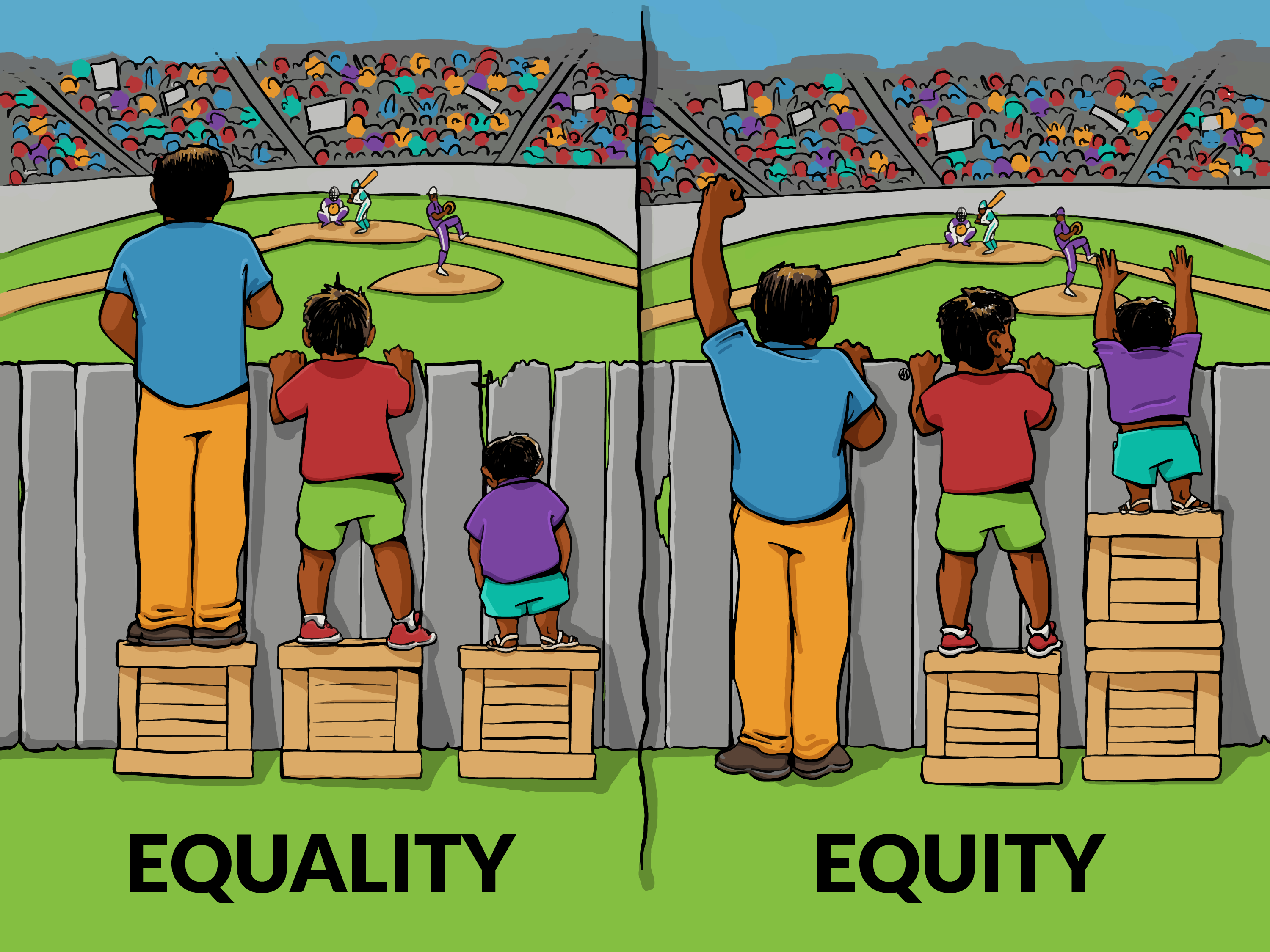

Equality vs. equity

Examples

Fairness in Machine Learning

COMPAS: recidivism scores

- \(Y\): Did they recidivate within 2 years?

- \(\hat{Y}\): Did COMPAS label them high risk?

- \(A\): Protected class (here, race)

ProPublica (Angwin et al. 2016)

Prediction fails differently for Black Defendants

| Error Type | White (%) | African-American (%) |
|---|---|---|
| Labeled Higher Risk, But Didn't Re-Offend | 23.5 | 44.9 |
| Labeled Lower Risk, Yet Did Re-Offend | 47.7 | 28.0 |

- ❌ COMPAS fails to demonstrate error rate parity

- ❌ COMPAS fails to demonstrate demographic parity

Northpointe

- 39-page rebuttal (Dieterich, Mendoza, and Brennan 2016)

- ProPublica misrepresents calculations

- ✅ COMPAS demonstrates predictive parity

\[ \Pr(\neg Y | \hat{Y}, W) = 0.409 \approx \Pr(\neg Y | \hat{Y}, B) = 0.370 \\ \Pr(Y | \neg \hat{Y}, W) = 0.288 \approx \Pr(Y | \neg \hat{Y}, B) = 0.350 \]

Statistical criteria for fairness

“Dozens” of statistical criteria for fairness boil down to 3:

- Independence

- Separation

- Sufficiency

Independence

- aka disparate impact

\[ \left| \Pr(\hat{Y} | W) - \Pr(\hat{Y} | B) \right| < \epsilon \,, \] where \(\epsilon\) is a small positive constant (e.g., \(\epsilon = 0.2\), as in the comparisons below)

- ❌ COMPAS FAILS:

\[ \left| 0.348 - 0.588 \right| = 0.240 > \epsilon \]
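A minimal base-R sketch of the independence check, assuming a "High" risk label plays the role of \(\hat{Y}\) and using the counts from the race / two_year_recid / risk_score table at the top of this section. Those counts do not reproduce the ProPublica rates of 0.348 and 0.588 quoted above, so compare the computation, not the numbers. The threshold `epsilon = 0.2` is an assumption matching the slides.

```r
# Counts from the race / two_year_recid / risk_score table
# B = African-American, W = Caucasian; "High" plays the role of Y-hat
n_high  <- c(B = 345 + 843, W = 106 + 230)
n_total <- c(B = 345 + 1169 + 843 + 818, W = 106 + 1175 + 230 + 592)
p_high  <- n_high / n_total           # Pr(Y-hat | A) for each group

epsilon <- 0.2                        # assumed threshold
independence_gap <- abs(p_high[["W"]] - p_high[["B"]])
round(p_high, 3)
round(independence_gap, 3)
independence_gap < epsilon            # FALSE: independence fails here too
```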

Separation

- aka error rate parity

\[ \left| \Pr(\hat{Y} | Y, W) - \Pr(\hat{Y} | Y, B) \right| < \epsilon \\ \left| \Pr(\hat{Y} | \neg Y, W) - \Pr(\hat{Y} | \neg Y, B) \right| < \epsilon \]

- ❌ COMPAS FAILS:

\[ \left| 0.523 - 0.720 \right| = 0.197 < \epsilon \\ \left| 0.235 - 0.448 \right| = 0.213 > \epsilon \]
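Separation compares two conditional rates, so it has two gaps, and both must be small. A sketch in base R, again assuming "High" plays the role of \(\hat{Y}\), using the counts from the table at the top of this section (not ProPublica's counts, so the rates differ from the slide), with an assumed `epsilon = 0.2`:

```r
# Counts from the table at the top of this section
# B = African-American, W = Caucasian
tp <- c(B = 843,  W = 230)    # re-offended, labeled High
fn <- c(B = 818,  W = 592)    # re-offended, labeled Low
fp <- c(B = 345,  W = 106)    # did not re-offend, labeled High
tn <- c(B = 1169, W = 1175)   # did not re-offend, labeled Low

tpr <- tp / (tp + fn)         # Pr(Y-hat | Y, A)
fpr <- fp / (fp + tn)         # Pr(Y-hat | not Y, A)

epsilon <- 0.2                # assumed threshold
gaps <- c(given_y     = abs(tpr[["W"]] - tpr[["B"]]),
          given_not_y = abs(fpr[["W"]] - fpr[["B"]]))
round(gaps, 3)
all(gaps < epsilon)           # separation needs both gaps small; FALSE here
```

As on the slide, one gap can pass while the other fails; here it is the gap among people who did re-offend that exceeds \(\epsilon\).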

Sufficiency

- aka well-calibration

\[ \left| \Pr(Y | \hat{Y}, W) - \Pr(Y | \hat{Y}, B) \right| < \epsilon \,, \]

- ✅ COMPAS PASSES:

\[ \left| 0.591 - 0.630 \right| = 0.039 < \epsilon \]
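A base-R sketch of the sufficiency check on the counts from the table at the top of this section, assuming "High" plays the role of \(\hat{Y}\) and `epsilon = 0.2`. It computes the slide's quantity \(\Pr(Y | \hat{Y}, A)\) and, for comparison, the other branch \(\Pr(Y | \neg \hat{Y}, A)\) that Northpointe's rebuttal also reports; requiring both is our stricter reading, not the slide's definition.

```r
# Counts from the table at the top of this section
# B = African-American, W = Caucasian
tp <- c(B = 843,  W = 230)    # labeled High, re-offended
fp <- c(B = 345,  W = 106)    # labeled High, did not re-offend
fn <- c(B = 818,  W = 592)    # labeled Low, re-offended
tn <- c(B = 1169, W = 1175)   # labeled Low, did not re-offend

p_y_high <- tp / (tp + fp)    # Pr(Y | Y-hat, A): the quantity on this slide
p_y_low  <- fn / (fn + tn)    # Pr(Y | not Y-hat, A): the other branch

epsilon <- 0.2                # assumed threshold
gaps <- c(given_high = abs(p_y_high[["W"]] - p_y_high[["B"]]),
          given_low  = abs(p_y_low[["W"]]  - p_y_low[["B"]]))
round(gaps, 3)
all(gaps < epsilon)           # TRUE: both gaps are small
```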

Kleinberg’s impossibility theorem

Unless the base rates are the same across groups (or the predictor is perfect), you can’t satisfy all three criteria simultaneously (Kleinberg, Mullainathan, and Raghavan 2016)
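A short derivation from the definitions shows why separation and sufficiency collide. Within a group \(a \in \{W, B\}\), write \(p_a = \Pr(Y | a)\) for its base rate. Writing \(\Pr(\hat{Y}, \neg Y | a)\) and \(\Pr(\hat{Y}, Y | a)\) in two ways each (conditioning on \(Y\), or on \(\hat{Y}\)) and dividing one by the other gives

\[ \frac{\Pr(\hat{Y} | \neg Y, a)\,(1 - p_a)}{\Pr(\hat{Y} | Y, a)\, p_a} = \frac{1 - \Pr(Y | \hat{Y}, a)}{\Pr(Y | \hat{Y}, a)} \quad\Longrightarrow\quad \Pr(\hat{Y} | \neg Y, a) = \frac{p_a}{1 - p_a} \cdot \frac{1 - \Pr(Y | \hat{Y}, a)}{\Pr(Y | \hat{Y}, a)} \cdot \Pr(\hat{Y} | Y, a) \]

If sufficiency and separation both held exactly, every factor except \(p_a / (1 - p_a)\) would be shared by \(W\) and \(B\), so the base rates would have to be equal, barring degenerate cases with no false positives.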

COMPAS summary

- ProPublica: COMPAS is biased because it lacks error rate parity

- Northpointe: Yeah, but it’s well-calibrated!

- Kleinberg: But since the base rates aren’t the same:

- …you’re both right

- …and you’re both wrong

- So is the algorithm fair or not??

Introducing faireR

- leverages yardstick and mlr3fairness

- computes independence, separation, and sufficiency (as the mean absolute difference across groups)

- visualizations

- data sets

- tidyverse-friendly
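With more than two groups, "mean absolute difference across groups" needs a precise definition. One plausible reading, sketched below in base R, averages \(|x_i - x_j|\) over all pairs of groups; the helper `mean_abs_diff()` is hypothetical and is not faireR's API, and how faireR combines the two conditional gaps in separation is not shown here.

```r
# Hypothetical helper (not faireR's API): average of |x_i - x_j|
# over all unordered pairs of groups i < j
mean_abs_diff <- function(x) {
  if (length(x) < 2) return(NA_real_)   # need at least two groups
  pairs <- combn(x, 2)                  # 2 x choose(n, 2) matrix of pairs
  mean(abs(pairs[1, ] - pairs[2, ]))
}

# With two groups this is just the absolute gap used on the earlier slides
mean_abs_diff(c(W = 0.348, B = 0.588))

# Made-up rates for three groups, for illustration only
mean_abs_diff(c(g1 = 0.30, g2 = 0.40, g3 = 0.60))
```

An alternative would be the mean absolute deviation from the overall rate; the two agree in spirit but not numerically once there are three or more groups.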

Using faireR

Fairness IRL

The narrow view

People with similar qualifications should be treated similarly

- individual fairness

- Ex: the meritocracy, race-blind admissions, etc.

The broad view

People of equal ability and ambition should have similar chances

- fairness across groups

- Ex: all schools should be equally well-funded

The middle view

Adjust for past injustice (that caused the differences in qualifications) at the time of opportunity

- until recently, common interpretation in the US

- Ex: Texas 10% university admission policy, alongside UT Austin's race-conscious admissions (upheld in 2016, struck down in 2023)

What does this have to do with sports?

Providing vocabulary, Ex 1

Instead of:

two equal pairs should have as close to an equal chance of winning as possible. (Pollard, Noble, and Pollard 2022)

- We have a broad view of fairness

- We focus on sufficiency (aka well-calibration)

Providing vocabulary, Ex 2

Instead of:

A swimmer with no arms should be able to compete against a swimmer with one or two arms…If two athletes with the same disability compete, the more skilled and fitter should win. (Bartneck and Moltchanova 2024)

- We have a middle view of fairness

- Focus on separation and sufficiency

Providing vocabulary, Ex 3

Instead of:

We present two methods to distribute prize money across gender based on the individual performances w.r.t. gender-specific records. We suppose these “across gender distributions” to be fair, as they suitably respect that women generally are slower than men. (Martens and Starflinger 2022)

- We have a middle view of fairness

- Focus on independence

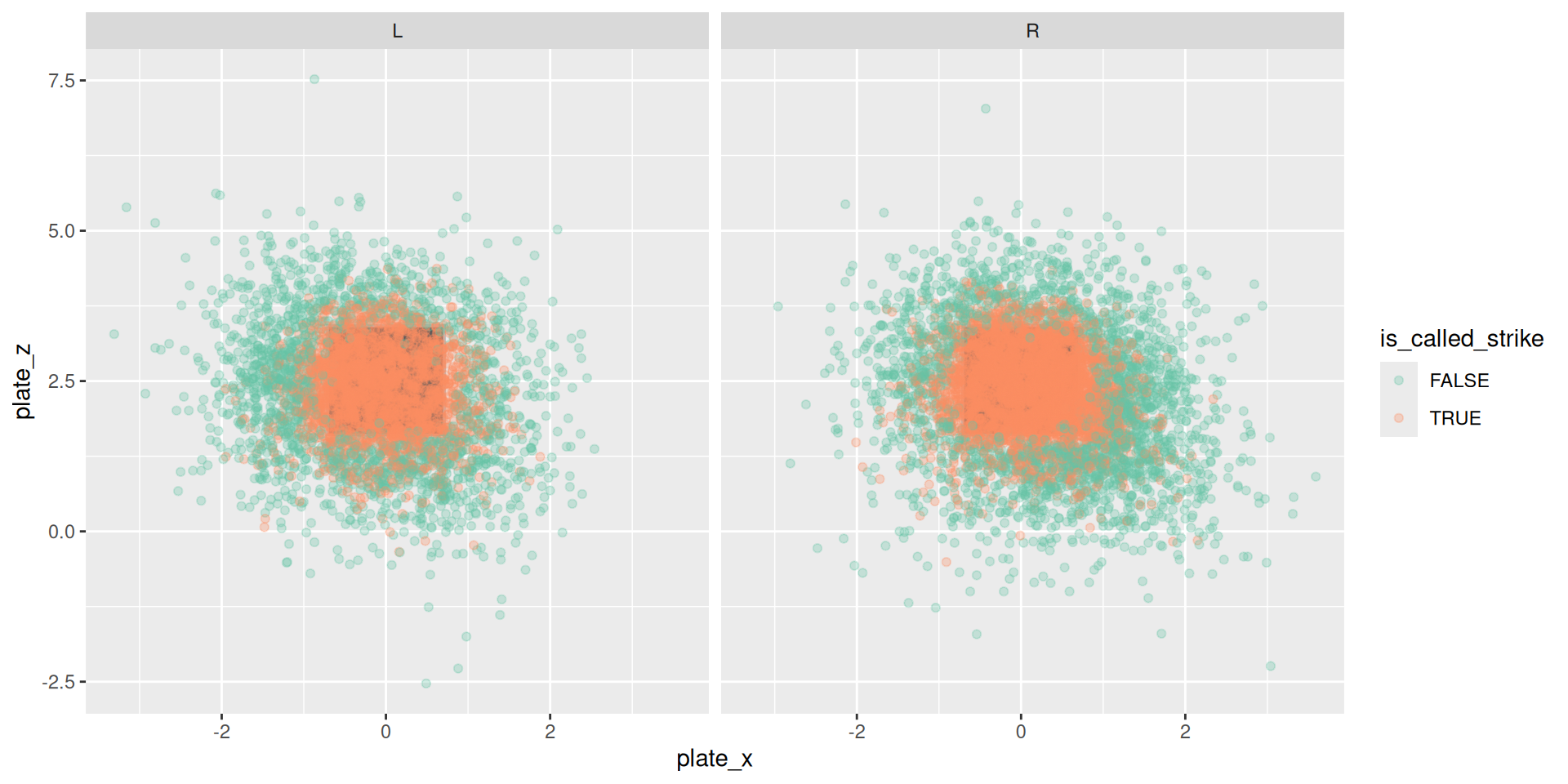

Called strikes in MLB

Are umpires fair to left-handed hitters (LHH)?

- \(Y\): Was it a strike?

- \(\hat{Y}\): Was it called a strike?

- ✅ Independence

- ✅ Separation

- ✅ Sufficiency

A simple Hall of Fame classifier

- See Mills and Salaga (2011) for a more serious attempt…

Is the classifier fair w.r.t. batters and pitchers?

```
# A tibble: 1 × 3
  independence separation sufficiency
         <dbl>      <dbl>       <dbl>
1      0.00322    0.00520      0.0323
```

✅

Is the classifier fair w.r.t. starters and relievers?

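```r
library(dplyr)
library(faireR)  # assumed source of fairness_cube() and hof2025

hof2025 |>
  mutate(
    is_pitcher = tSO > 100,   # more than 100 career tSO => treated as a pitcher
    is_reliever = tSV > 50    # more than 50 career tSV => treated as a reliever
  ) |>
  filter(is_pitcher) |>
  group_by(is_reliever) |>
  fairness_cube()
```

```
# A tibble: 1 × 3
  independence separation sufficiency
         <dbl>      <dbl>       <dbl>
1      0.00446      0.275      0.0821
```

❌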

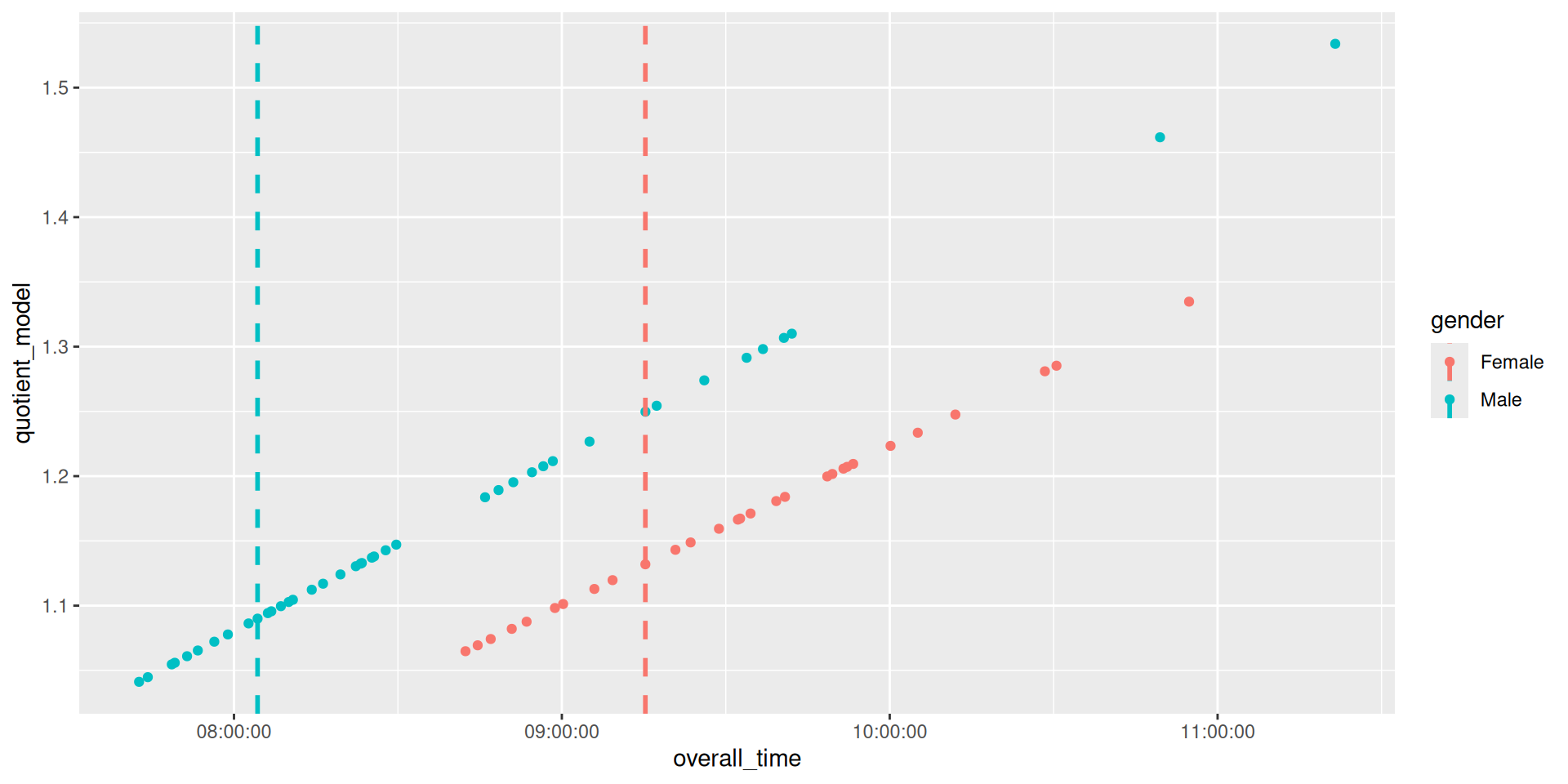

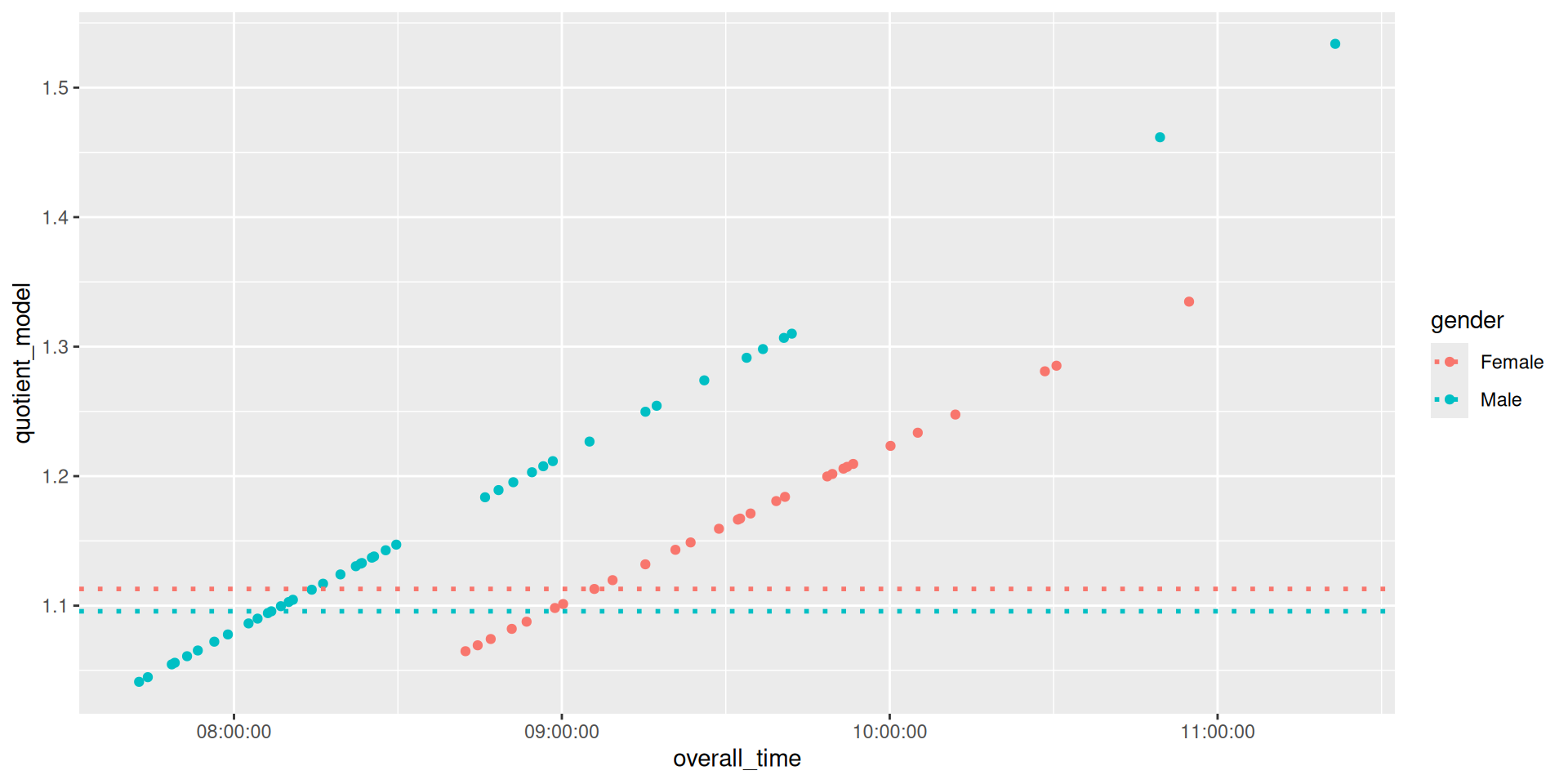

Ironman Texas

Award prizes to:

- Naive: Fastest 20, regardless of gender

- Status Quo: Fastest 10 in each gender division

- Martens: Fastest 20 in relation to gender-specific world records
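The three award rules can be sketched in base R on toy data. The finishers, their times (hours), and the gender-specific "record" times below are made up for illustration and are not Ironman Texas results or real records; `k = 2` stands in for the 20 prize slots. The Martens-style rule uses one simple reading of "in relation to gender-specific world records": rank by time divided by the record for the athlete's gender.

```r
# Toy finishers (made-up times in hours), NOT the Ironman Texas results
finishers <- data.frame(
  athlete = c("A", "B", "C", "D", "E", "F"),
  gender  = c("M", "M", "M", "W", "W", "W"),
  time    = c(8.0, 8.3, 8.9, 8.7, 9.0, 9.6)
)
record <- c(M = 7.5, W = 8.2)  # hypothetical gender-specific records

k <- 2  # prize slots (the slide uses 20 overall, or 10 per division)

# Naive: fastest k overall, regardless of gender
naive <- finishers$athlete[order(finishers$time)][1:k]

# Status quo: fastest k / 2 within each gender division
status_quo <- unlist(lapply(split(finishers, finishers$gender), function(d) {
  d$athlete[order(d$time)][seq_len(k / 2)]
}), use.names = FALSE)

# Martens-style: rank by time relative to the gender-specific record
finishers$ratio <- finishers$time / record[finishers$gender]
martens <- finishers$athlete[order(finishers$ratio)][1:k]

naive        # "A" "B"
status_quo   # "A" "D"
martens      # "D" "A"
```

Even on six athletes the rules disagree: the naive rule awards only men, the status quo fixes one prize per division, and the ratio rule lets the result depend on how close each athlete is to their division's record.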

Texas Ironman naive

Texas Ironman status quo

Texas Ironman proposed

Texas Ironman fairness

```
# A tibble: 1 × 3
  independence separation sufficiency
         <dbl>      <dbl>       <dbl>
1        0.101         NA          NA
```

Future work

- Visualize fairness in 2D

- ROC curves

- Calibration plots

- Visualize fairness in 3D

- Distance metric for overall fairness?

- Is mean absolute difference the best measure?

- Find additional suitable applications