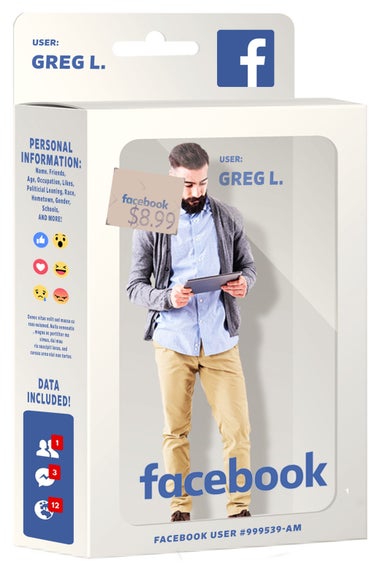

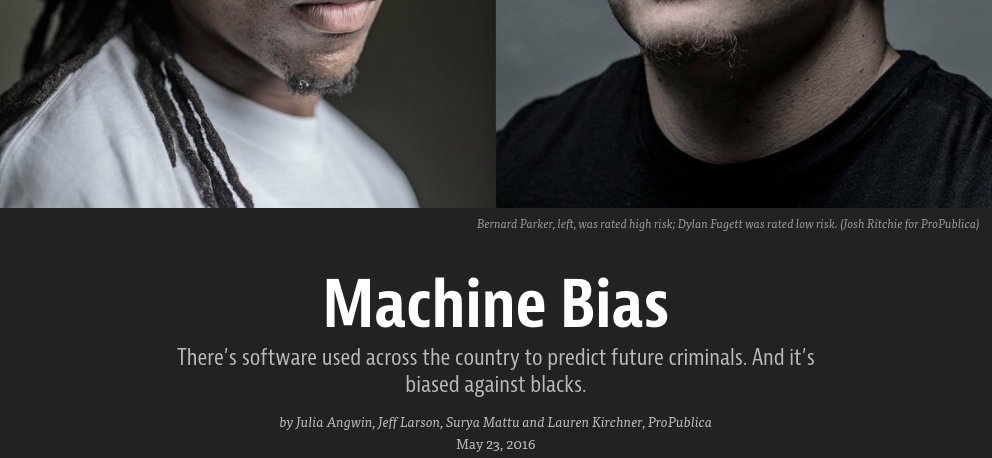

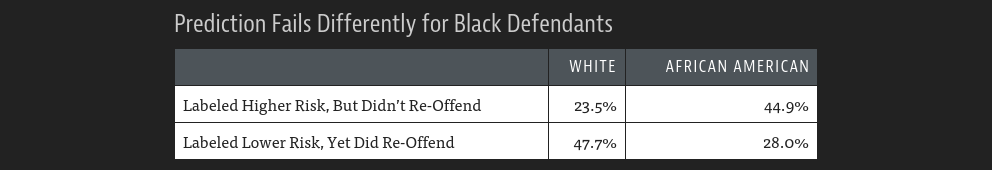

class: center, middle, inverse, title-slide

# Ethics
## Data science ethics
### Ben Baumer
### SDS 192</br>Sep 18, 2020</br>(<a href="http://beanumber.github.io/sds192/lectures/mdsr_ethics.html" class="uri">http://beanumber.github.io/sds192/lectures/mdsr_ethics.html</a>)

---

## Outline

- Why *data science* ethics?
- Why do *we* need to *teach* *data science* ethics?
- Concepts in data science ethics
- What are we doing about it?

---
class: center, middle, inverse

# Why *data science* ethics?

---

## Facebook

.footnote[https://www.nytimes.com/2019/10/17/business/zuckerberg-facebook-free-speech.html]

.pull-left[  ]

.pull-right[
> [Facebook] said it would not moderate politicians’ speech or fact-check their political ads because comments by political leaders, **even if false**, were newsworthy and in the public’s interest to hear and debate.
]

--

- 10/12: [Warren Dares Facebook With Intentionally False Political Ad](https://www.nytimes.com/2019/10/12/technology/elizabeth-warren-facebook-ad.html)

--

- 10/17: [Defiant Zuckerberg Says Facebook Won’t Police Political Speech](https://www.nytimes.com/2019/10/17/business/zuckerberg-facebook-free-speech.html)

--

- 10/23: [Zuckerberg Defends Facebook's Currency Plans Before Congress](https://www.nytimes.com/aponline/2019/10/23/us/politics/bc-us-facebook-congress-zuckerberg.html?searchResultPosition=4)

--

- 10/25: [Mark Zuckerberg: Facebook Can Help the News Business](https://www.nytimes.com/2019/10/25/opinion/sunday/mark-zuckerberg-facebook-news.html)

---

## Cambridge Analytica

.footnote[[Towards a Linguistic Model of Stress, Well-being and Dark Traits in Russian Facebook Texts](https://www.fruct.org/publications/ainl-abstract/files/Pan.pdf)]

.pull-left[
- [How Trump Consultants Exploited the Facebook Data of Millions](https://www.nytimes.com/2018/03/17/us/politics/cambridge-analytica-trump-campaign.html)
- [‘I made Steve Bannon’s psychological warfare tool’: meet the data war whistleblower](https://www.theguardian.com/news/2018/mar/17/data-war-whistleblower-christopher-wylie-faceook-nix-bannon-trump)
]

.pull-right[  ]

---
background-image: url(https://heimdalsecurity.com/blog/wp-content/uploads/facebook-cambridge-analytica-scandal-explained-the-guardian-graphic.jpg)
background-size: contain
background-position: center, middle

---

## [You are the product](https://www.reddit.com/r/explainlikeimfive/comments/2m3f05/eli5_if_something_is_free_you_are_the_product/?st=jg6kk9bj&sh=a05019bc)

.footnote[[Are you really the product?](https://slate.com/technology/2018/04/are-you-really-facebooks-product-the-history-of-a-dangerous-idea.html)]

.pull-left[
<blockquote class="twitter-tweet"><p lang="en" dir="ltr">Bruce Schneier: “Don’t make the mistake of thinking you’re Facebook’s customer, you’re not – you’re the product. Its customers are the advertisers.”</p>— Jake Tapper (@jaketapper) <a href="https://twitter.com/jaketapper/status/976473447374221313?ref_src=twsrc%5Etfw">March 21, 2018</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script>
]

--

.pull-right[  ]

---

## Aside: Leaving Facebook

.footnote[[Do You Have a Moral Duty to Leave Facebook?](https://www.nytimes.com/2018/11/24/opinion/sunday/facebook-immoral.html)]

.pull-left[  ]

--

.pull-right[
- Amelia McNamara: [Deleting Facebook](https://www.amelia.mn/blog/misc/2019/12/29/Deleting-Facebook.html)
]

---

## [Criminal Sentencing](https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing)

---

## [Racial prediction](https://github.com/kosukeimai/wru)

```r
library(tidyverse)
library(wru)
data(voters)  # example voter file that ships with wru
# surname.only = TRUE: estimate Pr(race | surname) alone, ignoring geography
predict_race(voter.file = voters, surname.only = TRUE) %>%
  select(surname, pred.whi, pred.bla, pred.his, pred.asi, pred.oth)
```

```
## [1] "Proceeding with surname-only predictions..."
```

```
##       surname  pred.whi   pred.bla   pred.his   pred.asi   pred.oth
## 4      Khanna 0.0676000 0.00430000 0.00820000 0.86680000 0.05310000
## 2        Imai 0.0812000 0.00240000 0.06890000 0.73750000 0.11000000
## 8     Velasco 0.0594000 0.00260000 0.82270000 0.10510000 0.01020000
## 1     Fifield 0.9355936 0.00220022 0.02850285 0.00780078 0.02590259
## 10       Zhou 0.0098000 0.00180000 0.00065000 0.98200000 0.00575000
## 7    Ratkovic 0.9187000 0.01083333 0.01083333 0.01083333 0.04880000
## 3     Johnson 0.5897000 0.34630000 0.02360000 0.00540000 0.03500000
## 5       Lopez 0.0486000 0.00570000 0.92920000 0.01020000 0.00630000
## 11 Wantchekon 0.6665000 0.08530000 0.13670000 0.07970000 0.03180000
## 6       Morse 0.9054000 0.04310000 0.02060000 0.00720000 0.02370000
```

.footnote[Imai, K. and Khanna, K. (2016). "[Improving Ecological Inference by Predicting Individual Ethnicity from Voter Registration Records](https://doi.org/10.1093/pan/mpw001)." *Political Analysis*, Vol. 24, No. 2 (Spring), pp. 263-272.]

---

## "Gaydar"

.footnote[Wang, Y., & Kosinski, M. (2018). Deep neural networks are more accurate than humans at detecting sexual orientation from facial images. *Journal of Personality and Social Psychology*, 114(2), 246–257. https://doi.org/10.1037/pspa0000098]

.pull-left[
- [The A.I. “Gaydar” Study and the Real Dangers of Big Data](https://www.newyorker.com/news/daily-comment/the-ai-gaydar-study-and-the-real-dangers-of-big-data)
- [Why Stanford Researchers Tried to Create a ‘Gaydar’ Machine](https://www.nytimes.com/2017/10/09/science/stanford-sexual-orientation-study.html)
]

.pull-right[  ]

--

> the study consisted **entirely of white faces**, but only because the dating site had served up too few faces of color to provide for meaningful analysis.

---

## Algorithms reflect the biases of their creators

.footnote[https://www.newyorker.com/news/daily-comment/the-ai-gaydar-study-and-the-real-dangers-of-big-data]

> A piece of data itself has no positive or negative moral value, but the way we manipulate it does. It’s hard to imagine a more contentious project than programming **ethics into our algorithms**; to do otherwise, however, and allow algorithms to monitor themselves, is to invite the quicksand of **moral equivalence**.

---
class: center, middle, inverse

# Why do *we*</br>need to *teach*</br>*data science* ethics?

---

## "I'm just an engineer"

<blockquote class="twitter-tweet" data-lang="en"><p lang="en" dir="ltr">Researchers build AI to identify gang members. When asked about potential misuses, presenter (a computer scientist at Harvard) says "I'm just an engineer." 🤦🏿‍♂️🤦🏿‍♂️🤦🏿‍♂️<a href="https://t.co/NbKepiaG4Y">https://t.co/NbKepiaG4Y</a> <a href="https://t.co/qp6f0okJ1g">pic.twitter.com/qp6f0okJ1g</a></p>— One Ring (doorbell) to surveil them all... (@hypervisible) <a href="https://twitter.com/hypervisible/status/970276540419395584?ref_src=twsrc%5Etfw">March 4, 2018</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script>

---

## NAS report

.footnote[National Academies of Sciences, Engineering, and Medicine (2018), [Data science for undergraduates: opportunities and options](http://sites.nationalacademies.org/cstb/currentprojects/cstb_175246)]

> Ethics is a topic that, given the nature of data science, students should learn and practice **throughout their education**. Academic institutions should ensure that **ethics is woven into the data science curriculum** from the beginning and throughout.

--

> The data science community should adopt a **code of ethics**; such a code should be affirmed by members of professional societies, included in professional development programs and curricula, and conveyed through educational programs. The code should be reevaluated often in light of new developments.

---
class: center, middle, inverse

# Concepts in data science ethics

---

## Something old, something new...

.pull-left[
Old ethical notions:

- *How to Lie with Statistics*
- The Belmont Report (human subjects research)
- reproducibility and replicability
- p-hacking
- conflicts of interest
]

--

.pull-right[
Newer ethical notions:

- **algorithmic bias**
- web scraping (ToS)
- doxxing
- de-identifying personal data
- re-identifying personal data
]

--

> "These ethical areas are obviously informed by longstanding ethical principles, but are distinct in the way that computers, the Internet, and databases have transformed the way we live."

.footnote[Baumer, Garcia, Kim, Kinnaird, Ott (under review), *Integrating data science ethics into an undergraduate major*]

---

## Algorithmic bias

.footnote[https://en.wikipedia.org/wiki/Algorithmic_bias]

> Algorithmic bias describes **systematic and repeatable errors** in a computer system that create unfair outcomes, such as privileging one arbitrary group of users over others.

--

- Example: recall the criminal sentencing algorithm

---

## [Doxxing](https://en.wikipedia.org/wiki/Doxing)

> Doxing or doxxing (from dox, abbreviation of documents) is the Internet-based practice of researching and broadcasting private or identifying information (especially **personally identifying information**) about an individual or organization.

--

> Doxing may be carried out for various reasons, including to aid law enforcement, business analysis, risk analytics, extortion, coercion, inflicting harm, harassment, online shaming, and vigilante versions of justice.

--

- Example: [OkCupid](https://fortune.com/2016/05/18/okcupid-data-research/)

.footnote[Kirkegaard, Emil OW, and Julius D Bjerrekær. 2016. “The OKCupid Dataset: A Very Large Public Dataset of Dating Site Users.” *Open Differential Psychology* 46.]
---

## Re-identification

.footnote[https://research.neustar.biz/2014/09/15/riding-with-the-stars-passenger-privacy-in-the-nyc-taxicab-dataset/]

.pull-left[  ]

.pull-right[
> In Brad Cooper’s case, we now know that his cab took him to Greenwich Village, possibly to have dinner at Melibea, and that he paid $10.50, with *no recorded tip*.
]

--

> Ironically, he got in the cab to escape the photographers!

---
class: center, middle, inverse

# What are we doing about it?

---

## Raising awareness

.pull-left[  ]

.pull-right[
- [ACM Conference on Fairness, Accountability, and Transparency (ACM FAT*)](https://fatconference.org/index.html)
- National Academies of Sciences, Engineering, and Medicine (2018), [Data science for undergraduates: opportunities and options](http://sites.nationalacademies.org/cstb/currentprojects/cstb_175246)
]

---

## Recent speakers at Smith

.pull-left[  ]

.pull-right[  ]

---

## [Algorithmic Justice League](https://www.ajlunited.org/)

<div style="max-width:854px"><div style="position:relative;height:0;padding-bottom:56.25%"><iframe src="https://embed.ted.com/talks/joy_buolamwini_how_i_m_fighting_bias_in_algorithms" width="854" height="480" style="position:absolute;left:0;top:0;width:100%;height:100%" frameborder="0" scrolling="no" allowfullscreen></iframe></div></div>

---

## [Data 4 Black Lives](http://d4bl.org/conference.html)

<blockquote class="twitter-tweet"><p lang="en" dir="ltr">On our way to registration at <a href="https://twitter.com/hashtag/Data4BlackLives?src=hash&ref_src=twsrc%5Etfw">#Data4BlackLives</a> ready to get this started! <a href="https://t.co/xFU0yCt9zu">pic.twitter.com/xFU0yCt9zu</a></p>— Smith College SDS (@SmithCollegeSDS) <a href="https://twitter.com/SmithCollegeSDS/status/1083860370228432896?ref_src=twsrc%5Etfw">January 11, 2019</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script>

---

## Codes of ethics

.pull-left[
- [Data Values and Principles](https://datapractices.org/manifesto/)
- [National Academy of Sciences Hippocratic Oath](https://www.nap.edu/read/25104/chapter/13)
]

.pull-right[  ]

.footnote[https://medium.com/@dpatil/a-code-of-ethics-for-data-science-cda27d1fac1]